Blog

Posts from our team

Latest

The "Beyond Code" Future

The Future Isn't Programmed. It's Grown.

10 August 2025

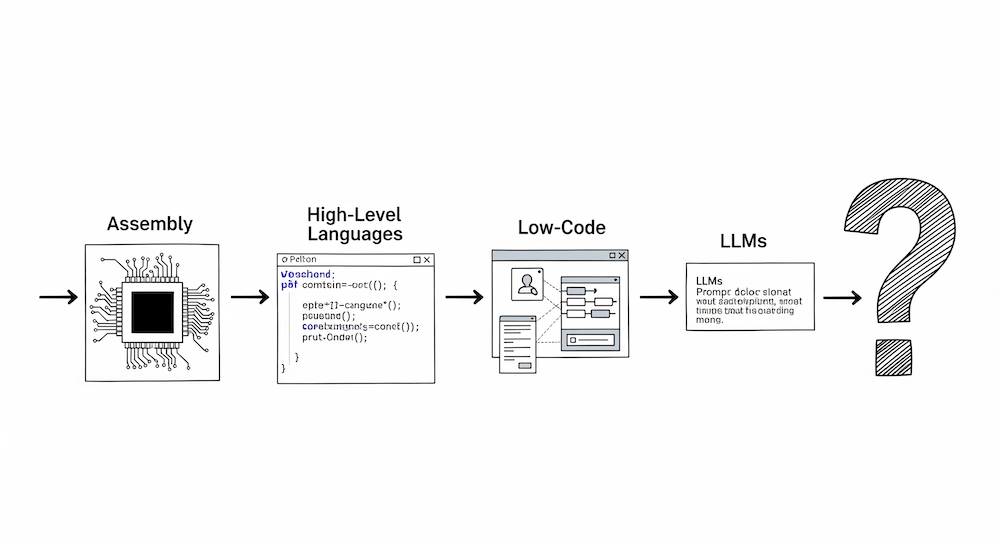

Over the last six posts, we've journeyed from the artisanal era of assembly code to the generative magic of LLMs. We've seen how each step in the evolution of software creation has increased our power, but also revealed new limitations—from the rigidity of traditional architecture, to the "glass ceiling" of Low-Code, to the contextual blindness of AI.

The Next Step: Unifying Logic, Structure, and Language

The Synthesis: Giving the Genie a Workshop.

14 July 2025

In this series, we've journeyed through the evolution of software creation. We've seen the trade-offs at each stage:

• Traditional Code: Powerful but slow and rigid.

• Low-Code: Fast but limited by a "glass ceiling."

• LLMs: Magically fast but lacking context and architectural stability.

All posts

The LLM Revolution and its "Context" Problem

The Age of the Genie: The "Magic" and "Madness" of LLMs.

12 July 2025

We've now entered the most transformative stage in the evolution of software creation: the Age of the Genie. With Large Language Models (LLMs), we can simply state our wish in a text prompt.

Low/No-Code Promise and Its Glass Ceiling

The Age of Prefabrication: The Power and Limitations of Low/No-Code.

10 July 2025

In our journey through the evolution of software creation, we arrived at the "Age of Prefabrication," the era of Low-Code and No-Code platforms. Their arrival was a game-changer, and their value proposition is undeniable: radical speed and accessibility.

The Age of the Factory: Building the Digital World (and Its Walls)

Following the "Artisan" era of programming, we entered the "Age of the Factory."

09 July 2025

High-level languages like Java, C++, and Python, along with powerful frameworks, became our assembly lines. This was the industrial revolution of software.

The Evolution of Creation: From Code to Prompts

The story of information technology is a story of abstraction.

07 July 2025

From the first stone tools to the microchip, our progress has been defined by our ability to build more powerful tools that hide underlying complexity. Software development is no different.

Let's trace this evolution:

Conclusion: A New Foundation for Intelligence

The Future Isn't Programmed. It's Grown.

03 July 2025

Over the last posts, we've journeyed from the fundamental limitations of today's software to a new paradigm for building intelligent systems. We've seen how the dEO (Dynamic Executable Ontology) approach can: ...

High-Stakes Adaptation:

The Dual-Use dEO

Intelligence Under Pressure: The dEO Paradigm in High-Stakes Environments

02 July 2025

So far, we've explored how the dEO paradigm can transform software development and enterprise operations. But the true test of an adaptive intelligence is how it performs under extreme pressure, in chaotic environments where conditions change in an instant.

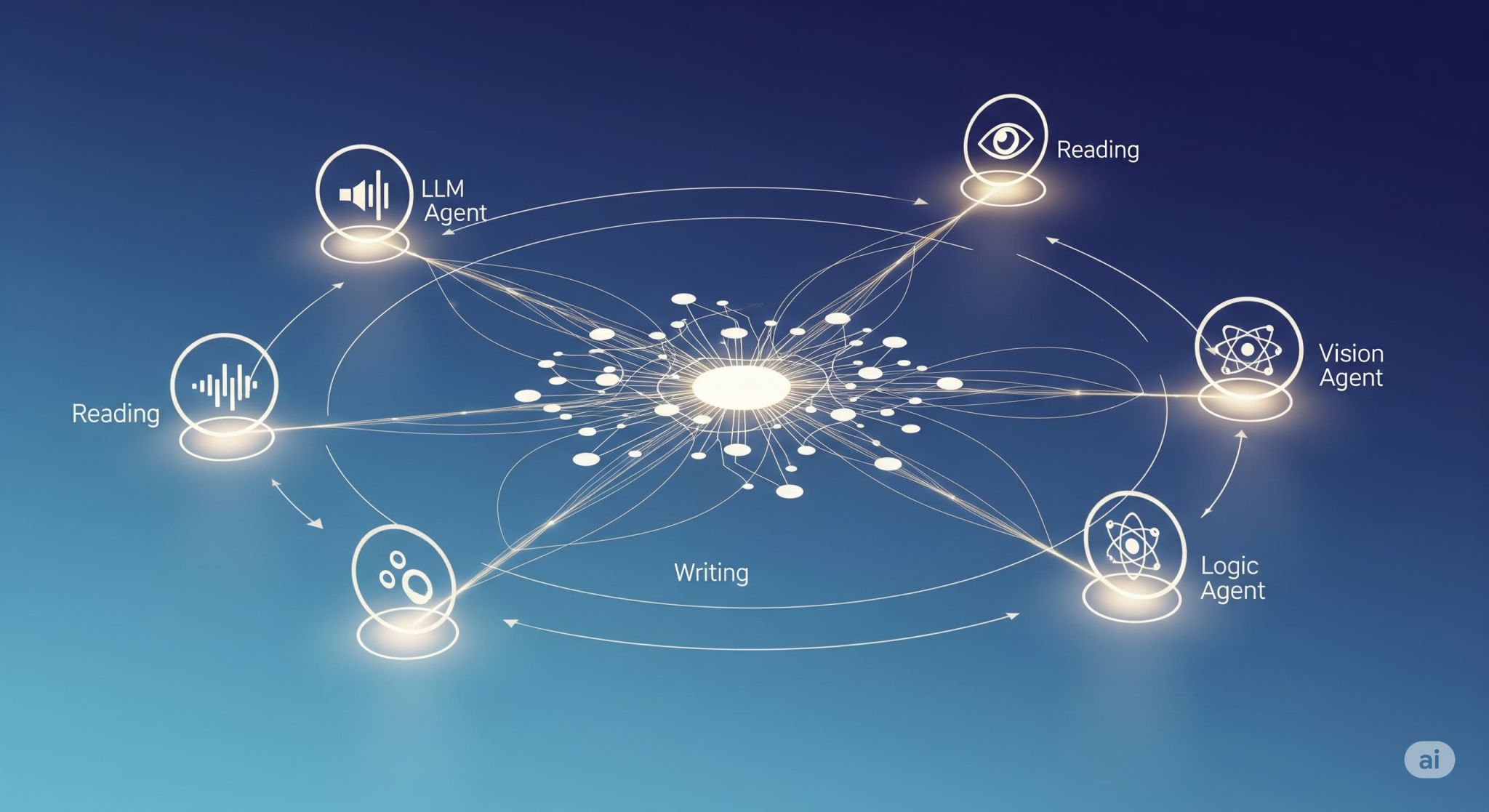

The Collaborative Intelligence of AI Agents and Robotics

A Shared Reality: Enabling True Teamwork

01 July 2025

The world of AI is experiencing a Cambrian explosion. We have powerful, specialized agents for language (LLMs), vision, data analysis, and more. But this has created a new problem: a digital "Tower of Babel." Each agent speaks its own language and has its own narrow view of the world ...

The Future of Personalized Care and Scientific Discovery

From Big Data to Living Knowledge

29 June 2025

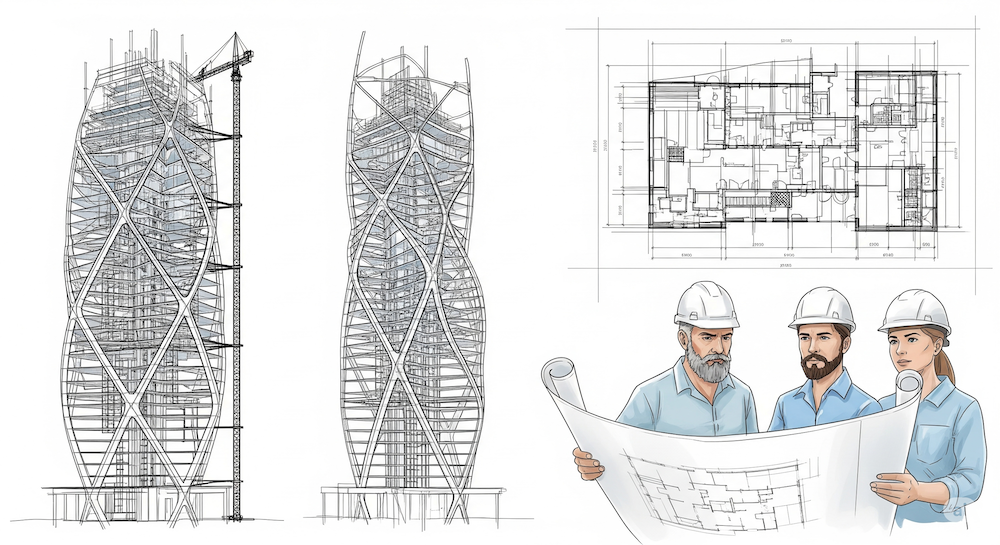

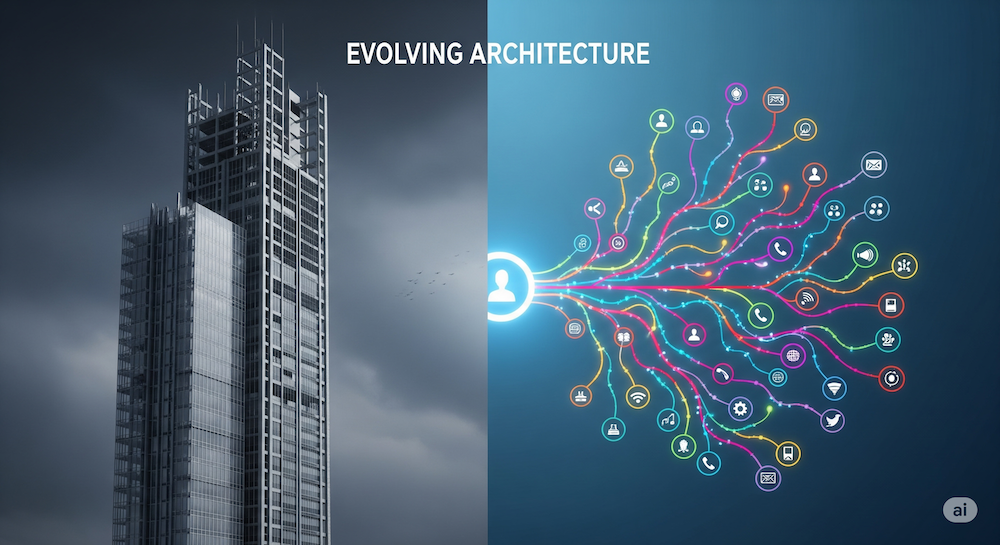

For decades, we’ve built software like we build skyscrapers: with a detailed architectural blueprint that tries to predict every need upfront. Even "agile" methods often operate within this "blueprint fallacy," adding features to a rigid foundation, which leads to our industry's most persistent headaches:

The Truly Smart Enterprise: A Business That Learns

Your business doesn't run on flowcharts. Why should its software?

28 June 2025

Every company has two versions of its processes. There's the "official" version, documented in rigid ERP systems and process diagrams. Then there's the way work actually gets done: the clever workarounds, the expert intuitions (and Excel sheets!), and the on-the-fly adjustments ...

Overcoming Legacy Systems

How to Modernize a System You Can't Afford to Shut Down

26 June 2025

Every large enterprise has one: a critical, monolithic legacy system. It might run finance, logistics, or core operations. It’s brittle, impossible to update, and a black box to all but a handful of senior engineers. Yet, it’s too essential to fail.

Fixing Software Development Itself - An Evolving Architecture

From Rigid Blueprints to Living Systems.

25 June 2025

For decades, we’ve built software like we build skyscrapers: with a detailed architectural blueprint that tries to predict every need upfront. Even "agile" methods often operate within this "blueprint fallacy," adding features to a rigid foundation, which leads to our industry's most persistent headaches:

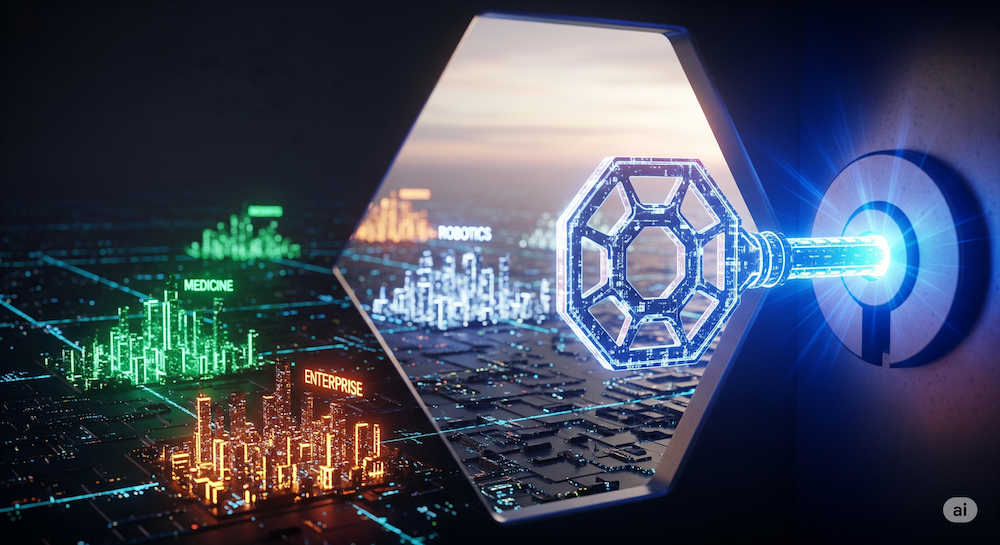

Beyond Evolving AI: What Can We Actually Build With It?

An Introduction to the Application Landscape

24 June 2025

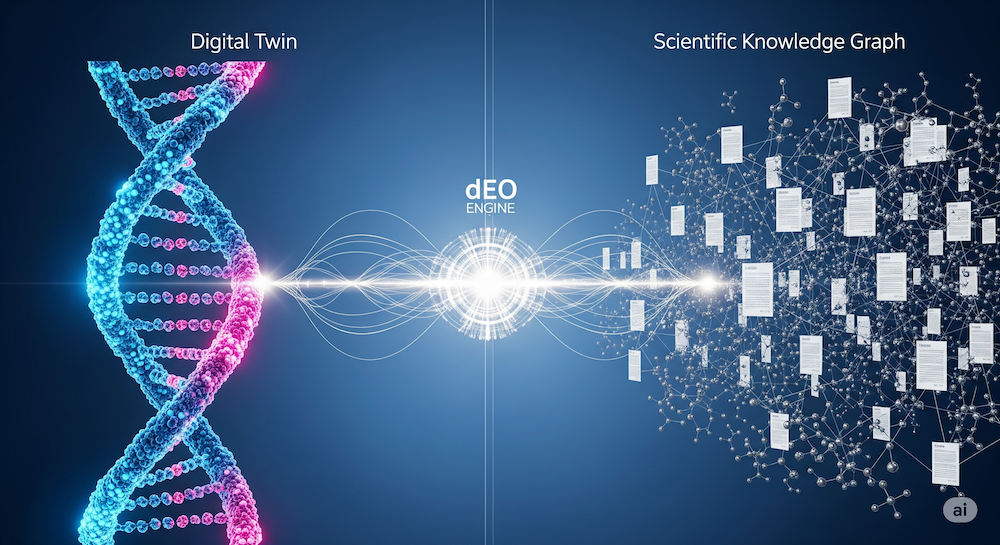

We explored a vision for a new kind of AI, one that evolves, learns from a handful of examples, and even creates its own hypotheses. We moved from the philosophical problem of rigid, deterministic software to the concept of a "living" system built on the dEO engine.

Beyond Babel: Creating a Universal Language for AI Agents

The Next Frontier for AI Isn’t Just Smarter Agents - It’s Agents That Can Collaborate

21 June 2025

In our previous series, we explored a new paradigm for building AI that can learn, create, and evolve. Now, let's turn to one of the biggest challenges in the field today: getting different AI systems to work together.

The dEO application examples:

Intelligent Evolving Devices

Smart Toys, Adaptive Objects, and the Future of OEM.

16 June 2025

We've journeyed from theory to a functional engine. Now, let's see what happens when the dEO (ex Machina, of course) engine is embedded into the world around us. How does this "beyond code" approach transform everyday objects?

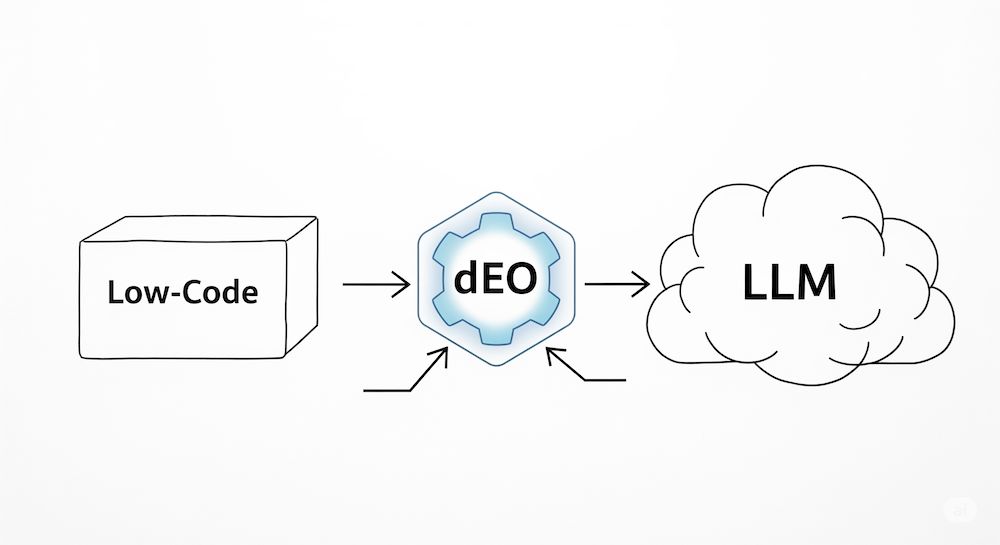

Supercharged Senses: The Role of LLMs in an Evolving AI

Where Do LLMs Fit In? Supercharging an Evolving AI.

15 June 2025

Given our vision for a new, evolving AI, it’s fair to ask: What about the incredible AI tools we already have? Where do Large Language Models (LLMs), generative models, and other machine learning systems fit into this picture?

From Theory to Engine: Introducing dEO Engine

The Engine for "Beyond Code" Intelligence: Introducing dEO (ex Machina, of course)

14 June 2025

A vision for an AI that evolves like a living system is inspiring. But how do you actually build it? How do you move from philosophy to a functional product? This is the bridge from theory to reality. At cognito.one, we have engineered the core technology.

The Vision: An AI That Evolves

Beyond Programming: The Dawn of a Truly Adaptive Intelligence

13 June 2025

We'll explore what it means to create a system that is not merely programmed, but can grow and evolve on its own, adapting to new challenges in ways its creators never explicitly designed. This is the path toward a fundamentally new kind of machine intelligence.

The Two Ways of Knowing: How a Machine Could Actually Create

Memorizing vs. Creating: Teaching Machines the Difference

12 June 2025

Can an AI have a true "eureka!" moment? Can it generate a genuinely new idea, not just an echo of its training data? To answer this, we need to explore two fundamentally different ways of knowing: learning from experience and creating from reason.

Beyond 1s and 0s: Building a System That Understands Reality

Seeing the World in Layers

11 June 2025

In our last post, we discussed unifying "code" and "data" into a single, living structure. But what is this structure made of? To build a truly adaptive AI, we first need a better way to represent the world. The reality our AI needs to understand isn't flat; it's made of layers.

A Radical Idea: What if Code Was as Flexible as Data?

The Wall Between Code and Data is Holding AI Back. Let's Tear It Down

10 June 2025

In our last post, we explored why even the most powerful AI can feel brittle.

The reason is determinism: systems are bound by a fixed set of rules. This brings us to a foundational principle of computer science that has defined software for over 70 years: the separation of code and data.

The Issue: The AI We Have vs. The AI We Need

Why Does AI Still Feel So Brittle? The Limits of Today's Computers

09 June 2025

Have you ever felt a flash of frustration with a "smart" device? Your music service recommends the same five artists endlessly. Your smart assistant answers a slightly unusual question with a completely nonsensical reply. For all their power, why do even the most advanced AI systems sometimes feel so rigid and fragile?
